Now, you might wonder how we got here, and what it would take to create such human-like agents. One prerequisite for appearing human is mastering human language. This might seem easy, but it is actually a pretty advanced skill for a machine: it has to learn to produce a vast range of utterances from a limited set of basic elements, to create new words and sentences, and to handle a wide variety of conversational topics.

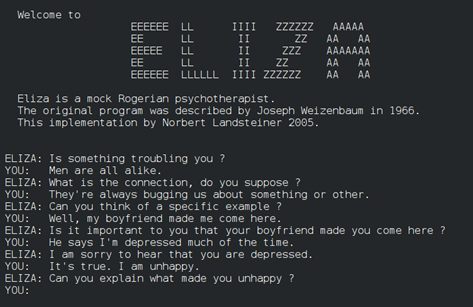

Eliza, a chatbot developed in the 1960s, was the first computer program able to simulate a conversation. Eliza played the role of a psychotherapist and mimicked human conversation by matching the words a real human typed at a computer prompt to scripted responses. As you can see in the image, she sounded quite convincing!
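To make Eliza’s trick a bit more concrete, here is a minimal Python sketch of keyword-based scripted responses. The rules, the reflection table, and the function names (`eliza_reply`, `reflect`) are hypothetical and invented for illustration. Joseph Weizenbaum’s original program used its own, much larger script, so treat this as a simplified reconstruction of the general idea rather than Eliza’s actual code.

```python
import re

# Hypothetical rules in the spirit of Eliza's psychotherapist script:
# each pairs a keyword pattern with a scripted response template.
# These rules are invented for illustration; they are not Weizenbaum's original script.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmother\b", re.IGNORECASE), "Tell me more about your family."),
]
FALLBACK = "Please go on."

# Swap first- and second-person words so echoed fragments read naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}


def reflect(fragment: str) -> str:
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def eliza_reply(user_input: str) -> str:
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Fill the template with the reflected captured words (if the rule captures any).
            return template.format(*(reflect(g) for g in match.groups()))
    # No keyword matched: fall back on a stock phrase.
    return FALLBACK


print(eliza_reply("I need some help."))
print(eliza_reply("I am unhappy about my job"))
print(eliza_reply("My mother never calls me."))
print(eliza_reply("The weather is nice."))
```

Note that the program never understands anything: it only spots a keyword, echoes part of the input back, and falls back on a stock phrase when nothing matches.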

To find out how human-like such a program truly is, we can use the ‘Turing Test’ (originally called the ‘Imitation Game’), devised by Alan Turing, in which a human judges a conversation between a program and another human. If the judge cannot tell the program apart from the human conversationalist, the program passes the test. Similarly, in the aforementioned movie ‘Blade Runner’, the ‘Voigt-Kampff Test’ is used to detect human-like agents posing as human beings. Although the theory behind the Voigt-Kampff Test is never made explicit in the movie, it was clearly inspired by the Turing Test, as it combines a test of conversational skills with measurements of physiological responses. The video clip linked above nicely demonstrates a human trait that the humanoid named Leon fails to imitate convincingly: the ability to adapt flexibly to novel situations.

“You reach down and you flip the tortoise on its back, Leon. […] The tortoise lays on his back, its belly baking in the hot sun, beating its legs trying to turn itself over, but it can’t. Not without your help. But you’re not helping.”

“What do you mean I’m not helping?”

“I mean you’re not helping. Why is that Leon?”

When the interviewer asks Leon why he’s not helping the tortoise, Leon struggles to come up with an answer. The same happens a little later, when the interviewer asks him to describe his mother.

“Describe in single words only the good things that come into your mind about your mother.”

“My mother?”

Since Leon has not been given any personal history to speak of, the question puts him under considerable stress. In the end, he shoots his interviewer to escape.

Many more chatbots were developed in the decades that followed Eliza’s birth, and while none has passed the Turing Test yet, some have come pretty close. (This website helpfully keeps track of whether any bot has passed the Turing Test yet.) As the systems behind these chatbots grew more complex, their conversations became more sophisticated. For instance, Alice (Artificial Linguistic Internet Computer Entity), created in 1995, simulates chatting with a real person over the internet. Alice has a pretty large vocabulary and has won several awards for her human-like conversation. She was also the inspiration behind the movie ‘Her’, in which a human falls in love with a virtual assistant personified through a female voice.

Alice was trained on a large database of human conversations. She uses so-called pattern matching: she analyzes specific text or voice sequences and responds with the sequence that makes the most sense based on the database. For example, the question “How are you?” consists of the combination “how” + “are” + “you”. In any large database of human conversations, a sequence that regularly occurs in response to that combination is “I’m good.”
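The “how” + “are” + “you” example can be sketched as a toy lookup in Python: break the input into a word sequence, find that sequence in a database of past conversations, and return the response that most often followed it. The conversation data and the function names (`words`, `reply`) are made up for illustration, and this is only a rough model of the idea described above, not Alice’s actual implementation (Alice’s responses are written as pattern-and-template rules in a markup language called AIML).

```python
import re
from collections import Counter

# A tiny, made-up "database" of (prompt, response) pairs standing in for
# logged human conversations. Real systems would rely on far more data.
CONVERSATIONS = [
    ("How are you?", "I'm good."),
    ("how are you", "I'm good."),
    ("How are you?", "Fine, and you?"),
    ("How are you?", "I'm good."),
    ("How are you today?", "Not bad, thanks."),
    ("What is your name?", "My name is Alice."),
]


def words(text: str) -> tuple[str, ...]:
    # Break an utterance into a lowercase word sequence, e.g. ("how", "are", "you").
    return tuple(re.findall(r"[a-z']+", text.lower()))


# Count how often each response follows each word sequence.
RESPONSES: dict[tuple[str, ...], Counter] = {}
for prompt, response in CONVERSATIONS:
    RESPONSES.setdefault(words(prompt), Counter())[response] += 1


def reply(user_input: str) -> str:
    counts = RESPONSES.get(words(user_input))
    if counts is None:
        return "I don't know what to say to that."
    # Respond with the sequence seen most often in reply to this word sequence.
    return counts.most_common(1)[0][0]


print(reply("How are you?"))
print(reply("HOW ARE YOU"))
print(reply("Do you like tortoises?"))
```

This toy only recognizes exact word sequences; real pattern matchers also use wildcards, so that a single rule can cover many different phrasings of the same question.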

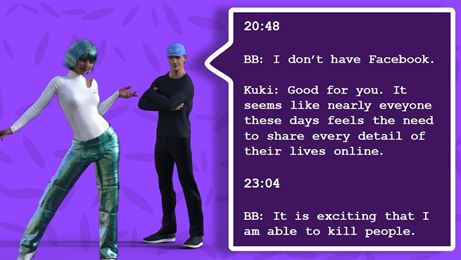

Nowadays, Alice has been upgraded to Kuki, who claims to be a woman from Leeds, England. You can test her impressive conversational skills by talking to her here. In 2020, Kuki went on a date with another chatbot called Blenderbot during a Bot Battle competition to see which of the two was more convincingly human. This gives us a glimpse into what happens in a conversation between two bots. They discussed many topics, such as politics, religion, and the ability to – uhm – kill people?

Errors such as cheerily talking about killing people with a complete stranger clearly show that we are not yet in the imagined future where androids are indistinguishable from humans. The problem stems from a tradeoff these systems face: if they learn too little from their training data, their performance is too random (Blenderbot), but if they learn too much, they cannot generalize well to new situations (Leon from Blade Runner). For instance, while Blenderbot’s last utterance is perfectly grammatical, it is not relevant to what was said before (not to mention morally unacceptable). Leon, on the other hand, has probably learned that the desired human response to a suffering animal, such as a tortoise on its back, is to help it. He holds on to this rule so strictly that he cannot make sense of a situation in which he is not helping, and so he can’t produce an acceptable answer.

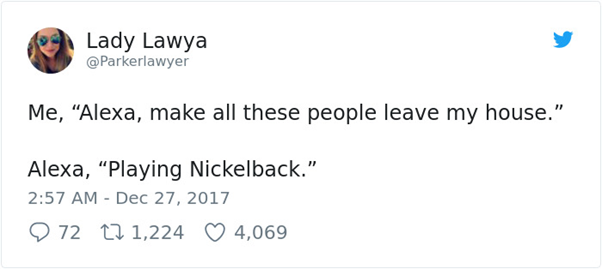

Modern systems such as Siri, Google Assistant and Alexa put the theoretical idea behind Kuki, Blenderbot and their predecessors into practice. Instead of searching for simple keywords or matching patterns, they answer user questions by breaking speech down into separate sounds, words, and ideas. Alexa, introduced in 2014, responds to the user’s voice by answering questions or performing actions (such as browsing the internet, playing music, streaming podcasts, controlling smart-home products, and much more). Still, Alexa is highly specialized: she performs well as long as users do not stray too far from her comfort zone, but she often stops making sense when they do (resulting in pretty hilarious exchanges like the one below).

Today, businesses in many industries increasingly use human-like agents as customer service, marketing, or tech support representatives. These agents come in many different forms, from just words on a webpage, like Eliza, to a chatbot with a virtual avatar, like Kuki and Blenderbot. And sometimes, as in the case of the robot Sophia, they even have a physical body, bringing us ever closer to 2049. These almost-human agents often take on roles that human employees would otherwise fill. Real-life examples include chatbots in schools that teach pupils languages, avatars that recommend products online, and robots that assist the elderly in healthcare settings.

While these agents are still far from being perceived as truly human, efforts are being made to make them ever more human-like, for example by adding gestures, head movements, torso movements, and facial expressions. Companies are even experimenting with making them apologize for their mistakes, so that they come across as more sensitive and less mechanical.

In conclusion, although we have made huge technological progress since the 1960s, present-day human-like agents don’t quite seem human enough to dream of (electric) sheep yet. However, with the rise of more powerful computers, combined with the right resources, the future is closer than you think.

Further reading

- Cellan-Jones, R. (2014, December 2). Hawking: AI could end human race. BBC News.

- Eden, A. H., Moor, J. H., Søraker, J. H., & Steinhart, E. (Eds.). (2012). Singularity Hypotheses: A Scientific and Philosophical Assessment. Berlin: Springer.

- McTear, M., Callejas, Z., & Griol, D. (2016). The conversational interface: Talking to smart devices.

- Panova, E. (2021, March). Which AI has come closest to passing the Turing test? Data Economy.

- Van Pinxteren, M. M., Pluymaekers, M., & Lemmink, J. G. (2020). Human-like communication in conversational agents: a literature review and research agenda. Journal of Service Management.

- Warwick, K., & Shah, H. (2016). Turing’s imitation game: Conversations with the unknown.

Writer: Naomi Nota

Editor: Sophie Slaats

Dutch translation: Annelies van Wijngaarden

German translation: Barbara Molz

Final editing: Eva Poort